How to Test Kubernetes Deployment Degradation When RabbitMQ Is Down

Do you know how your application will perform if your RabbitMQ cluster goes down?

In this post, we will outline how you can proactively test your systems to validate graceful degradation when RabbitMQ is unavailable. You will learn why this is essential for system reliability to conduct testing like this and how to run a chaos experiment to ensure your systems can handle this type of failure.

What is RabbitMQ and Why is Graceful Degradation Essential?

Many teams use RabbitMQ, an open-source messaging and streaming broker, to coordinate the data communication for their applications. It’s a vital message broker in many architectures, especially within Kubernetes environments where it facilitates asynchronous communication between microservices.

The Role of RabbitMQ in Kubernetes

In a Kubernetes-native architecture, microservices need a way to communicate without being tightly coupled. RabbitMQ functions as a post office for your services. It manages workloads, enables scalable asynchronous operations, and ensures messages are delivered reliably.

Common use cases include:

- Background Job Processing: Offloading long-running tasks like generating reports or sending emails, so the main application remains responsive.

- Event-Driven Architectures: Allowing services to react to events happening in other parts of the system without direct dependencies.

- Inter-Service Communication: Managing the flow of data between dozens or even hundreds of microservices.

Defining Graceful Degradation

Graceful degradation is a system’s ability to maintain limited but essential functionality when a critical dependency fails. Instead of a catastrophic failure that brings everything down, the system sheds non-essential features while keeping core services online.

When a RabbitMQ cluster becomes unavailable, the application should continue to operate with reduced capability, protecting the user experience from a complete outage, and the outage should be detected and flagged by your observability tool.

Consider an e-commerce application. If RabbitMQ, which processes new orders for the shipping department, goes down, graceful degradation means customers may still browse products and add items to their cart. They just might see a temporary message that order processing is delayed, but they are not met with an error page.

The Importance of Proactive Validation

How do you know if your Kubernetes deployment will degrade gracefully? Without testing it out, you are relying on hope. By running an experiment proactively, you can safely test how your system responds and make improvements before an outage occurs.

This type of validation is critical for several reasons:

- System Reliability: Verifying resilience prevents minor issues from escalating into major incidents that breach Service Level Objectives (SLOs).

- User Experience: It protects users from disruptive outages, maintaining their trust in your service.

- Operational Readiness: It prepares your team for real-world failures, reducing Mean Time To Recovery (MTTR) and the stress of firefighting incidents in production.

Now, let’s explore how to create and run an experiment for this use case.

How to Run an Experiment to Test RabbitMQ Failure

The most effective way to validate graceful degradation is with chaos engineering. By intentionally injecting a controlled failure, you can observe how your system behaves and identify weaknesses before they impact users. A chaos experiment provides the proof you need to build true system resilience.

Here’s how you can design and execute an experiment to simulate a RabbitMQ failure.

Step 1: Define Your Hypothesis and Steady State

Every good experiment starts with a clear hypothesis. This is a statement about what you expect to happen. Before you start, you must also understand your system’s “steady state,” or its normal behavior.

- Hypothesis: “When the RabbitMQ service is unavailable, the primary application will remain operational, and user-facing services that do not depend on messaging will continue to function without error. Services dependent on RabbitMQ will fail predictably without causing a cascading failure.”

- Steady State: Identify Key Performance Indicators (KPIs) to monitor, such as application response time, error rates (HTTP 5xx), and resource utilization (CPU/memory). This baseline is essential for measuring the impact of the experiment.

Step 2: Design the Chaos Experiment

Next, design the experiment to test your hypothesis. The goal is to simulate a RabbitMQ outage in a way that is realistic and contained.

- Experiment: Block all network traffic to and from the RabbitMQ pods within your Kubernetes cluster. This accurately mimics a network partition or a complete service failure.

- Blast Radius: Limit the experiment’s scope to a specific namespace or a subset of services. This minimizes potential impact, especially when running tests in a production environment. For example, you could target only the services (or subset of services) in the dev or staging namespace.

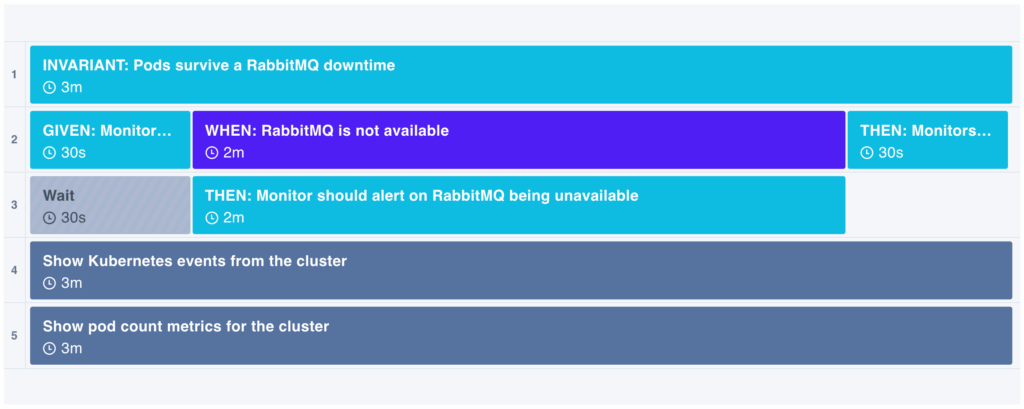

Here’s an example of the steps for this type of experiment:

Steadybit Experiment Template: Graceful Degradation of Kubernetes Deployment While RabbitMQ Is Down

You can find this experiment template in the Reliability Hub, Steadybit’s open source library of chaos engineering components, including over 80 similar experiment templates.

Step 3: Execute and Monitor the Chaos Experiment

With your plan in place, it’s time to run the experiment. If you’re using a tool like Steadybit, you’ll be able to watch the experiment execute in real-time.

As the experiment runs, here are some things to keep in mind:

- Monitor application logs: Look for connection errors, retries, or unexpected exceptions. Are services entering a crash loop trying to reconnect?

- Check non-dependent features: Verify that core functionalities unrelated to messaging are still working as expected. Can users log in? Can they navigate the site?

- Watch your dashboards: Keep an eye on your monitoring tools. Are error rates spiking? Is latency increasing for unrelated services?

Step 4: Analyze the Results and Make Improvements

Once the experiment is complete, analyze the outcome. Did the system behave as you hypothesized?

- Did it degrade gracefully? If yes, congratulations! You have validated a key aspect of your system’s resilience.

- Did it fail unexpectedly? This is also a valuable outcome. Perhaps a health check on an unrelated service failed because it had an implicit dependency on RabbitMQ. Or maybe a service went into a crash loop, consuming excessive resources and threatening the stability of its node.

Based on these findings, you can take action. Remediation might involve implementing circuit breakers to stop services from endlessly retrying failed connections, adding fallback mechanisms, or improving error handling to fail more gracefully.

Building Resilience and Operational Readiness

Running chaos experiments to validate graceful degradation is not just a technical exercise; it delivers tangible business value and strengthens your team’s skillset.

With one proactive test, you can find and fix issues in a controlled environment. With experiments run regularly or integrated into a CI/CD pipeline, you can systematically eliminate potential causes of outages. This practice is fundamental to meeting and exceeding aggressive uptime targets like 99.99%.

If you want to get started running chaos experiments with a tool that makes it easy, schedule a demo for your team or start a free trial today. Build more resilient systems one experiment at a time.