What is Chaos Engineering?

Chaos Engineering is the proactive approach of testing and strengthening resilience across applications and services by deliberately introducing controlled disruptions. These disruptions reveal weaknesses and behaviors under real-world conditions like high traffic, unexpected outages, or resource bottlenecks.

While intentional fault injection has a long history in software development, the discipline of chaos engineering was popularized in 2010 by the reliability team at Netflix through an internal tool called Chaos Monkey, which randomly terminated instances in production.

Since then, reliability teams around the world have adopted new practices, technologies, and tools to implement this type of reliability testing in a safe and strategic way.

How does Chaos Engineering work?

The goal of chaos engineering is to learn about your systems by running experiments, either in pre-production or production environments. Like any experiment, a chaos experiment follows standard steps that ensure you actually learn from your efforts.

By following a systematic process and challenging assumptions, teams are better able to generate actionable insights into system performance.

Step 1: Define Expectations

Start by identifying your system’s baseline behavior using metrics like latency, throughput, or error rates. Observability or monitoring tools are critical in providing visibility into these types of metrics, so you can develop specific benchmarks. If you haven’t configured a monitoring tool, that is often a good first step before diving deeper into chaos engineering.
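As a minimal sketch of what a baseline can look like, the Python below summarizes one window of request samples into p95 latency, error rate, and throughput. It assumes you can export per-request latency and a success flag from your monitoring tool; the `Request` type and `steady_state` function are hypothetical names, not part of any particular tool's API.

```python
import statistics
from dataclasses import dataclass


@dataclass
class Request:
    latency_ms: float
    ok: bool  # False if the request returned an error


def p95(values):
    # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile
    return statistics.quantiles(values, n=20)[-1]


def steady_state(requests, window_seconds):
    """Summarize one observation window into baseline metrics."""
    if len(requests) < 2:
        raise ValueError("need at least two requests to estimate a percentile")
    latencies = [r.latency_ms for r in requests]
    errors = sum(1 for r in requests if not r.ok)
    return {
        "p95_latency_ms": p95(latencies),
        "error_rate": errors / len(requests),
        "throughput_rps": len(requests) / window_seconds,
    }
```

Recording this summary over a period of normal traffic gives you the benchmark that later experiments are compared against.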

Step 2: Hypothesize Outcomes

Next, form a hypothesis for how you expect your system to behave when a specific fault or stress is introduced. The hypothesis should include a trackable metric, such as maintaining a certain performance standard, or a clear statement of application behavior (e.g., “If a primary database fails, read replicas will maintain query availability.”).

A successful experiment is one in which the hypothesis holds (e.g., “We expect average CPU utilization not to exceed 50% at any point”). A failed experiment means the hypothesis was wrong and adjustments are needed.
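One way to keep a hypothesis testable is to pair the metric with an explicit pass condition. This is a hypothetical sketch (the `Hypothesis` class is illustrative, not taken from any chaos platform) that encodes the CPU example, assuming CPU utilization samples are collected while the experiment runs:

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass
class Hypothesis:
    description: str
    metric: str
    # Condition that every sample of the metric must satisfy during the experiment
    holds_for: Callable[[float], bool]

    def evaluate(self, samples: Sequence[float]) -> bool:
        if not samples:
            # No data means no verdict, rather than a silent pass
            raise ValueError("no samples collected; cannot judge the hypothesis")
        return all(self.holds_for(v) for v in samples)


cpu_hypothesis = Hypothesis(
    description="Average CPU utilization never exceeds 50% during the experiment",
    metric="cpu_utilization_percent",
    holds_for=lambda v: v <= 50.0,
)
```

Raising on an empty sample list is a deliberate choice: a missing metric should be investigated, not counted as a successful experiment.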

Step 3: Run an Experiment

Run your experiment by introducing your test conditions. Typically, this involves artificially interrupting services, adding latency spikes, stressing CPU or memory, or simulating an outage. We’ll explain how teams inject these failures in a section below, but it usually involves a script, manual changes in a console (ClickOps), or a chaos engineering platform that orchestrates experiments.
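Some teams start by injecting faults at the application level before using infrastructure-level tools. The sketch below shows one such approach, assuming Python service code: a hypothetical `inject_faults` decorator that adds a fixed delay to each call and fails a configurable fraction of calls. It illustrates the idea and is not how any specific platform injects failures.

```python
import functools
import random
import time


class InjectedFault(Exception):
    """Raised by the wrapper to simulate a failing dependency."""


def inject_faults(latency_s=0.0, error_rate=0.0, rng=None):
    """Decorator adding `latency_s` seconds of delay and failing about `error_rate` of calls."""
    rng = rng if rng is not None else random.Random()

    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            if latency_s > 0:
                time.sleep(latency_s)  # artificial latency spike
            if rng.random() < error_rate:
                raise InjectedFault(f"injected failure in {func.__name__}")
            return func(*args, **kwargs)
        return wrapper
    return decorator
```

For example, decorating a hypothetical `fetch_inventory` function with `@inject_faults(latency_s=0.2, error_rate=0.1)` adds 200 ms to every call and makes roughly 10% of calls fail.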

Step 4: Observe Behavior

As your experiment runs, use your monitoring tools to compare actual performance against expected outcomes. For example, if you expect the service to degrade somewhat when you inject latency, monitor whether the degradation exceeds your expectations. After reviewing the metrics and logs, you should be able to determine whether your experiment succeeded or failed; in other words, whether your hypothesis was correct.
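To make the comparison concrete, the sketch below splits timestamped latency samples into a pre-experiment window and an experiment window, then checks whether p95 latency grew by more than an allowed percentage. The `compare_windows` function and the percentage-based degradation budget are assumptions chosen for illustration.

```python
import statistics


def p95(values):
    # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile
    return statistics.quantiles(values, n=20)[-1]


def compare_windows(samples, start, end, max_increase_pct):
    """samples: (unix_ts, latency_ms) pairs; [start, end) is the experiment window."""
    samples = list(samples)  # allow generators, since we iterate twice
    before = [v for ts, v in samples if ts < start]
    during = [v for ts, v in samples if start <= ts < end]
    if len(before) < 2 or len(during) < 2:
        raise ValueError("need at least two samples in each window")
    base, observed = p95(before), p95(during)
    if base <= 0:
        raise ValueError("baseline latency must be positive")
    increase_pct = (observed - base) / base * 100
    return {
        "baseline_p95": base,
        "observed_p95": observed,
        "increase_pct": increase_pct,
        "hypothesis_held": increase_pct <= max_increase_pct,
    }
```

Expressing the expectation as a budget ("p95 may rise by at most 25%") turns "somewhat degraded" into a yes/no answer.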

Step 5: Refine and Repeat

Now that you have run your experiment, you can make use of the results.

If your hypothesis was correct, then you have accurately predicted how your system would behave. It may have even exceeded your expectations. If you predicted a positive reliability result, then you should have more confidence in your systems now.

If your hypothesis was proven incorrect and your system was unable to perform adequately under the conditions, then you have likely revealed a reliability issue that could be a liability in production if the experiment conditions were to become a reality. Once you have made changes to address the issue, you can then rerun the experiment to validate that it is now successful.

A successful experiment validates that, at the time of running the experiment, the hypothesis was correct. To ensure that you maintain this result over time, teams will pick certain experiments to automate and incorporate in their CI/CD workflows.
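A CI/CD job needs a pass/fail signal and a guarantee that the injected fault is removed afterward. As a hedged sketch, assuming you supply your own `inject`, `rollback`, and `check_hypothesis` callables (all hypothetical), the runner below always rolls back and converts the result into a process exit code:

```python
from typing import Callable


def run_experiment(inject: Callable[[], None],
                   rollback: Callable[[], None],
                   check_hypothesis: Callable[[], bool]) -> int:
    """Run one experiment; return 0 if the hypothesis held, 1 otherwise."""
    try:
        inject()
        held = check_hypothesis()
    finally:
        # Always remove the injected fault, even if injection or the check raised.
        rollback()
    return 0 if held else 1
```

A pipeline step could then call `sys.exit(run_experiment(...))`, so a failed hypothesis fails the build, while an error during the check still propagates after the rollback runs.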

Key principles of Chaos Engineering

Chaos Engineering can be an intimidating term for stakeholders who are not familiar with the practice. “We have enough chaos already!” is a common response.

To implement chaos engineering and reliability testing in an effective way, it’s important to follow some key principles:

Start Small and Focused, Then Expand

The best way to get started is by selecting a relatively small target and limiting the impact of your experiment. For example, instead of running experiments in production, lower the potential negative impact by running experiments in a non-production environment. Instead of running an experiment that will impact a full cluster, target a subset. Instead of a critical service, start with a service that has fewer downstream impacts.

Once your small experiments succeed, gradually expand them to cover multiple services.
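Limiting the blast radius often comes down to how targets are selected. This sketch assumes a simple inventory of targets tagged with an environment and a criticality flag (the `Target` type and `select_targets` function are hypothetical). It picks a random subset of non-critical targets in one environment, capped at a fraction of the eligible pool:

```python
import math
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Target:
    name: str
    environment: str
    critical: bool


def select_targets(targets, environment, max_fraction, rng=None):
    """Return a random, capped subset of non-critical targets in one environment."""
    if not 0 < max_fraction <= 1:
        raise ValueError("max_fraction must be in (0, 1]")
    rng = rng if rng is not None else random.Random()
    eligible = [t for t in targets if t.environment == environment and not t.critical]
    if not eligible:
        return []
    # Always hit at least one target, never more than the fraction allows (rounded down)
    count = max(1, math.floor(len(eligible) * max_fraction))
    return rng.sample(eligible, count)
```

Expanding later is then a matter of raising `max_fraction`, changing the environment, or relaxing the criticality filter, one step at a time.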

Communicate Early and Often Across Teams

With each new experiment, there is an opportunity to learn how systems behave and respond under certain conditions. These are sociotechnical systems: they include both the applications and the software teams behind them.

Before running an experiment, it’s critical to proactively communicate ahead of time so everyone has sufficient visibility and understands the potential impacts. Stress your applications – not your people. With proactive communication, you can also educate teams internally on why you are running experiments, key insights from the results, and what changes are needed to boost reliability.

Experiments are also an opportunity for Site Reliability Engineering (SRE) teams to communicate reliability issues to development teams and make sure resilience is prioritized across the board. For example, many SRE teams run events called GameDays, where they run specific experiments and collaborate with developers on root cause analysis and on improving their incident response processes.


Enable Teams to Run Experiments Easily

If an organization wants to scale reliability testing and chaos engineering across applications and teams, it’s critical to make creating and running experiments as simple, fast, and automated as possible. Commercial chaos engineering platforms like Steadybit are designed to let teams quickly scan environments for targets, identify reliability gaps, and launch experiments with a drag-and-drop timeline editor. These experiments can then be incorporated into automated development workflows to validate reliability standards without burdening development teams with time-intensive processes. With strong enablement for chaos engineering programs, real adoption across teams is much more likely.

What are examples of chaos experiments?

There is a wide range of possible chaos experiments you could run to test your systems. In this section, we’ll outline common experiment types and list specific examples for each.

Dependency Failures

With the rise of microservices, systems are more reliant on internal and external dependencies to fulfill requests effectively. Experiments that simulate service failures allow teams to see how their systems respond to outages.

Here are experiment examples:

- Simulate increased latency or packet loss to test service response times and throughput.

- Emulate the unavailability of a critical service to observe the system’s resilience and failure modes.

- Introduce connection failures or read/write delays to assess the robustness of data access patterns and caching mechanisms.

- Mimic rate limiting or downtime of third-party APIs to evaluate external dependency management and error handling.
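As an illustration of the last example, the sketch below simulates a rate-limited third-party API and checks that the calling service falls back to its last known value. Everything here is hypothetical: `FakeExchangeRateAPI` stands in for a real client, `RateLimited` stands in for an HTTP 429 response, the 0.9 rate is made up, and serving a cached value is only one possible error-handling strategy.

```python
class RateLimited(Exception):
    """Simulated HTTP 429 Too Many Requests from a third-party API."""


class FakeExchangeRateAPI:
    """Stand-in client; set `rate_limited = True` to inject the fault."""

    def __init__(self):
        self.rate_limited = False

    def get_rate(self, currency):
        if self.rate_limited:
            raise RateLimited("429 Too Many Requests")
        return {"EUR": 0.9}[currency]  # made-up value for illustration


class PriceService:
    """Caller under test: falls back to the last known rate when throttled."""

    def __init__(self, api):
        self.api = api
        self.last_known = {}

    def rate(self, currency):
        try:
            value = self.api.get_rate(currency)
            self.last_known[currency] = value
            return value, "live"
        except RateLimited:
            if currency in self.last_known:
                return self.last_known[currency], "cached"
            raise  # nothing to fall back to; surface the failure
```

The experiment itself is three steps: warm the cache with a normal call, set `api.rate_limited = True`, and confirm the service still answers (from cache) instead of failing.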

Resource Constraints

Each system has resource limitations on things like CPU, memory, disk I/O, and network bandwidth. Even with cloud providers and autoscaling options, it’s useful to run experiments that stress your systems to see how resource constraints impact performance.

Here are experiment examples:

- Simulate memory leaks or high memory demands to test the system’s handling of low memory conditions.

- Increase disk read/write operations or fill up disk space to observe how the system copes with disk I/O bottlenecks and space limitations.
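For the disk-space example, a minimal Python sketch might write a filler file of a given size and always remove it afterward. The `fill_disk` helper and its `max_megabytes` safety cap are assumptions for illustration; on a real host you would point it at the volume your service writes to and watch how the application behaves as free space shrinks.

```python
import os
import tempfile
from contextlib import contextmanager

# Random bytes, so filesystem compression does not shrink the filler file
CHUNK = os.urandom(1024 * 1024)


@contextmanager
def fill_disk(directory, megabytes, max_megabytes=1024):
    """Consume `megabytes` of space in `directory`; delete the filler file on exit."""
    if megabytes > max_megabytes:
        raise ValueError(f"refusing to write {megabytes} MB; safety cap is {max_megabytes} MB")
    fd, path = tempfile.mkstemp(prefix="chaos-fill-", dir=directory)
    try:
        with os.fdopen(fd, "wb") as f:
            for _ in range(megabytes):
                f.write(CHUNK)
            f.flush()
            os.fsync(f.fileno())  # push the data to disk instead of leaving it in the page cache
        yield path
    finally:
        os.remove(path)  # clean up even if the experiment body raised
```

Using a context manager ties cleanup to the end of the experiment, so the disk is freed even if the observation code fails.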

Network Disruptions

Various network conditions can affect a system’s operations, such as outages, DNS failures, or limited network access. By introducing these disruptions, experiments can show how a system responds and adapts to network unreliability.

Here are experiment examples:

- Introduce DNS resolution issues to evaluate the system’s reliance on DNS and its ability to use fallback DNS services.

- Introduce artificial delay in the network to simulate high-latency conditions, affecting the communication between services or components.

- Simulate the loss of data packets in the network to test how well the system handles data transmission errors and retries.

- Limit the network bandwidth available to the application, simulating congestion conditions or degraded network services.

- Force abrupt disconnections or intermittent connectivity to test session persistence and reconnection strategies.
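On Linux hosts, latency, packet loss, and bandwidth limits are commonly injected with the `tc` command's `netem` queueing discipline. The sketch below only builds the `tc` command lines and prints them by default; the interface name `eth0` and the helper names are assumptions. Applying the commands requires root privileges and installs netem as the interface's root qdisc, so run them only on a host you are allowed to disrupt, and run the delete command afterward to restore normal traffic.

```python
import shlex
import subprocess


def netem_add_cmd(interface, delay_ms=None, jitter_ms=None, loss_pct=None, rate=None):
    """Build a `tc qdisc add ... netem` command; at least one impairment is required."""
    impairments = []
    if delay_ms is not None:
        impairments += ["delay", f"{delay_ms}ms"]
        if jitter_ms is not None:
            impairments.append(f"{jitter_ms}ms")  # netem reads the value after delay as jitter
    elif jitter_ms is not None:
        raise ValueError("jitter requires a base delay")
    if loss_pct is not None:
        impairments += ["loss", f"{loss_pct}%"]
    if rate is not None:
        impairments += ["rate", rate]  # bandwidth limit, e.g. "1mbit"
    if not impairments:
        raise ValueError("specify at least one impairment")
    return ["tc", "qdisc", "add", "dev", interface, "root", "netem", *impairments]


def netem_del_cmd(interface):
    """Build the command that removes the netem qdisc again."""
    return ["tc", "qdisc", "del", "dev", interface, "root"]


def apply(cmd, dry_run=True):
    if dry_run:
        print(shlex.join(cmd))  # show what would run
    else:
        subprocess.run(cmd, check=True)  # needs root on a real host
```

For example, `apply(netem_add_cmd("eth0", delay_ms=100, jitter_ms=20, loss_pct=1))` prints `tc qdisc add dev eth0 root netem delay 100ms 20ms loss 1%`.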


If you want to see even more specific experiment templates, you can view over 50 examples in the Reliability Hub, an open source library sponsored by Steadybit.

How do you measure the business value of chaos experiments?

So far, we’ve defined chaos engineering, explained the key principles, and outlined examples, but how can you make a business case for prioritizing chaos engineering over other initiatives?

While there are many ways to calculate ROI, these are the most common approaches:

Preventing Costly Incidents in Production

If you have applications that are associated with revenue or relied upon by customers, downtime is a tangible cost. You can project your current incident costs by calculating:

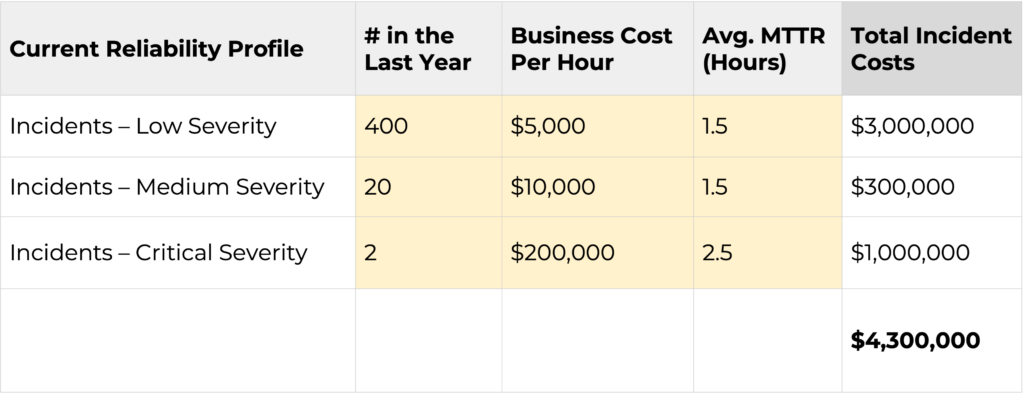

Number of incidents in the last year × average MTTR (mean time to resolve, in hours) × cost per hour of downtime = annual incident cost

If you break it out by incident severity, it could look something like this:

With this approach, you can then project savings by estimating how much proactive reliability testing reduces the number of production incidents per year. In the above example, if implementing chaos engineering helped reduce incidents by 20%, the result would be annual savings of $860,000.
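The calculation fits easily in a spreadsheet, but the sketch below shows it per severity tier. The tier names, incident counts, MTTR values, and hourly costs are invented for illustration and are not the figures from the article's severity breakdown. As a sanity check on the article's numbers, a 20% reduction worth $860,000 implies about $4.3 million in annual incident costs (860,000 / 0.20).

```python
from dataclasses import dataclass


@dataclass
class SeverityTier:
    name: str
    incidents_per_year: int
    avg_mttr_hours: float
    cost_per_hour: float

    def cost_per_incident(self):
        # Expected damage of a single incident of this severity
        return self.avg_mttr_hours * self.cost_per_hour

    def annual_cost(self):
        return self.incidents_per_year * self.cost_per_incident()


def projected_savings(tiers, reduction_pct):
    """Return (annual incident cost, savings if incidents drop by reduction_pct percent)."""
    total = sum(t.annual_cost() for t in tiers)
    return total, total * reduction_pct / 100


# Hypothetical inputs, for illustration only
tiers = [
    SeverityTier("critical", 5, 4.0, 100_000),
    SeverityTier("high", 20, 2.0, 25_000),
    SeverityTier("medium", 40, 1.0, 5_000),
]
```

With these hypothetical tiers, `projected_savings(tiers, 20)` gives $3,200,000 in annual cost and $640,000 in savings. The same `cost_per_incident` value is what you can attach to each reliability risk you find and fix.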

Finding and Remediating Reliability Risks

If you have applications with outstanding reliability issues, they carry a potential risk of downtime. Even if your organization hasn’t experienced significant downtime yet, there is meaningful value in finding and fixing these issues and reducing the overall risk of outages.

Using the incident cost calculation above, you can assign a value to each reliability risk by severity. For example, if running chaos experiments helps you identify and fix a critical-severity issue, you have prevented an issue that could have caused $500,000 in damages had it occurred in production.

As you run more experiments and scale your testing across applications, you will uncover your existing reliability issues and streamline your ability to remediate risk across your systems. If you run 100 experiments and 10 of them reveal reliability issues that need to be fixed, you can fix all or a subset of those issues and document the potential business liabilities that have now been addressed.

Improving Incident Response and Operational Readiness

If teams rely on production incidents to test runbooks, perform root cause analysis, and remediate issues, they miss the opportunity to practice and optimize their response in a low-stress, simulated scenario.

Running chaos experiments enables organizations to continually sharpen their incident response processes, validate that runbooks are up to date, and reduce their Mean Time To Resolve (MTTR).

Fidelity Investments recently presented on how they scaled chaos engineering efforts across their applications. As they expanded their “chaos coverage”, they were able to meaningfully decrease their MTTR.

Their presentation at AWS re:Invent shows how MTTR decreased as chaos coverage increased.

What tools do people use for Chaos Engineering?

Open Source Chaos Engineering Tools

There are many open source tools for chaos engineering, such as Chaos Monkey, LitmusChaos, Chaos Mesh, and Pumba. For the most part, these open source tools are technology-specific and good for initial experimentation.

For anyone trying to roll out chaos engineering and reliability testing at a multi-team or organization-wide level, commercial tools offer easier deployment, better user experience, and enterprise-grade security features.

Commercial Chaos Engineering Tools

Some companies build their own custom chaos engineering solutions, but then struggle with the maintenance and development of new functionality. Instead of building a custom solution, many teams will opt to buy a commercial platform.

The three leading commercial tools are Steadybit, Gremlin, and Harness Chaos.

If you are interested in evaluating a commercial chaos engineering platform, you’ll find that Steadybit is easiest to use, customize, and deploy due to its open source extension framework and timeline-based experiment editor.

How does Chaos Engineering integrate with Observability?

Observability tools like Datadog, Dynatrace, Honeycomb, Grafana, and New Relic give organizations real-time insight into system performance.

For example, Datadog captures data from servers, containers, databases, and third-party services, providing comprehensive visibility for cloud-scale applications. When integrated with a chaos engineering tool like Steadybit, Datadog tracks the impact of chaos experiments in real time, enabling teams to correlate chaos events with changes in system metrics and logs.

Similarly, New Relic delivers robust application performance monitoring with a focus on distributed systems, offering detailed insights into applications, infrastructure, and digital customer experiences.

Users can customize their observability environment with features like custom applications and dashboards, allowing for tailored analyses of chaos experiments and greater control over system health.

To learn from chaos experiments, you need the right data. It’s important to have a tight integration between your chaos engineering tool and monitoring tools so teams can track and act on key metrics like latency and error rates.

For example, Steadybit integrates with monitoring tools to track metrics like:

- Latency: Measure response times under stress.

- Error Rates: Identify transaction failures.

- Resource Utilization: Monitor CPU, memory, and network usage.

Challenges in Rolling Out Chaos Engineering

While Chaos Engineering delivers tremendous value, teams often face challenges when adopting chaos engineering tools. Steadybit is uniquely designed to address these obstacles, making it easier to embrace chaos experiments and integrate them into daily workflows.

Cultural Resistance

Many teams hesitate to embrace Chaos Engineering due to fears of causing unnecessary disruptions or the perception that it adds extra work.

Steadybit tackles this head-on with a user-friendly platform that demystifies the process.

By offering intuitive workflows and safety mechanisms, Steadybit helps teams see Chaos Engineering as a manageable and valuable practice rather than a risky or overwhelming initiative.

Resource Constraints

Organizations often worry about the time and effort required to implement Chaos Engineering.

Steadybit reduces this burden by enabling targeted, focused experiments that deliver high-value insights without consuming excessive resources.

The platform’s integration with existing tools and workflows ensures minimal disruption while still providing actionable data.

Technical Complexity

The technical complexity of creating and managing chaos experiments can be daunting, particularly for teams new to the practice. Steadybit simplifies the entire process, from designing experiments to analyzing results.

Predefined scenarios, customizable templates, and real-time observability tools lower the barrier to entry, enabling teams to focus on learning and improving rather than wrestling with implementation.

Getting started with Chaos Engineering

Starting with Chaos Engineering can seem complex, but Steadybit ensures a smooth and straightforward journey.

Here’s how to begin:

1. Define Critical Metrics

Identify and establish baseline metrics that represent your system’s steady state, such as latency, error rates, or throughput. These metrics will help you measure the impact of experiments and evaluate system health.

2. Start Small

Begin with a narrow scope to limit the potential impact. For example, test a single service or a non-production environment first. Steadybit’s platform helps you control the blast radius to ensure safe experimentation.

3. Experiment and Refine

Use the insights from initial experiments to strengthen your systems. Steadybit’s guided workflows and detailed observability features provide clear, actionable feedback, helping teams iterate and improve with confidence.

4. Scale Up Gradually

Once you’ve gained confidence in smaller experiments, expand the scope to include more critical systems or larger portions of your infrastructure. Steadybit makes it easy to scale chaos experiments safely.

Get Started: Ready to explore Chaos Engineering? Schedule a demo with Steadybit and transform your systems into resilience powerhouses.