How to Test Load Balancer Failover During an AWS Availability Zone Outage

If you are running applications on cloud infrastructure, an issue with your cloud provider could result in a costly outage. Are your systems ready for if an entire AWS Availability Zone (AZ) goes down?

Outage events like this often result in system downtime, distressed customer calls, and business operations grinding to a halt. But for some prepared teams, these incidents can be proof that their failover processes are working successfully.

Correctly configured load balancers could make a big difference. By setting up load balancers to respond automatically to AZ outages, you can trust that traffic will be rerouted to healthy instances in other zones without a single user noticing.

It takes both intentional failover design and continuous verification to achieve this level of system resilience. Just setting it up and assuming that it works correctly is a hope-based strategy.

In this post, we’ll explain how you can proactively test your load balancer’s cross-zone failover capabilities with chaos engineering to ensure true system resilience.

The Role of Load Balancers in Achieving High Availability

An Availability Zone (AZ) is a distinct cloud region, supported by separate, physical data centers that distribute the geological risk of any one location. This decentralized design is the foundation of high-availability architectures and common across cloud providers. In this post, we’ll focus on AWS specifically.

Load balancers, like AWS Application Load Balancer (ALB) or Network Load Balancer (NLB), sit in front of your services and distribute incoming traffic across multiple targets, such as EC2 instances or containers. In a well-architected system, these targets are spread across multiple AZs so there are still available resources available if any one AZ goes down.

In the scenario of an AZ outage, you would hope that your load balancer would run the following steps:

- Health Checks: The load balancer continuously sends health check requests to each registered target.

- Failure Detection: If any target fails to respond correctly, the load balancer marks it as unhealthy.

- Rerouting Traffic: When all targets within an entire AZ become unhealthy, as they would in an outage, the load balancer’s failover mechanism activates. It then stops sending traffic to the failed AZ and seamlessly reroutes traffic to the healthy targets in the remaining AZs. This ensures that even if your application has degraded performance, it still remains available and functioning for end users.

Why It’s Critical to Test Load Balancer Failover Processes

On paper, the failover process seems straightforward. But in complex, dynamic production environments, you can’t rely on the assumption or hope that systems will always respond as designed. Here’s why continuous verification is critical.

- Configuration Drift: Your architecture diagram might be perfect, but the reality often doesn’t line up perfectly. Misconfigurations in networking rules, security groups, or health check parameters can silently accumulate over time. These subtle changes can prevent the load balancer from correctly detecting failures or routing traffic, causing the failover to fail completely.

- Capacity Planning Issues: Let’s say the failover works, and traffic is redirected. Do the remaining AZs have enough capacity to handle the sudden influx? Without testing, you’re just guessing. A successful failover can still lead to performance degradation, increased latency, or a complete overload of the remaining instances if your auto-scaling policies aren’t tuned correctly.

- Dependencies and “Unknown Unknowns”: Complex systems hide complex dependencies. A failover might expose a critical service, like a database replica or a caching layer, that was only running in the failed AZ. This can trigger a cascade of failures that brings down your entire application, even though the initial load balancing worked.

- Impact on SLOs: A failed or even a slow failover directly impacts your uptime and availability Service Level Objectives (SLOs). This erodes user trust, harms your brand’s reputation, and can lead to costly violations of Service Level Agreements (SLAs).

Simulating an AWS AZ Outage with Chaos Engineering

The only way to know how your system will react to an AZ outage is to simulate one in a controlled manner. By using a chaos engineering solution like Steadybit, you can simulate this type of scenario safely.

Here’s how to build an experiment that validates your load balancer failover process during an AWS Availability Zone outage.

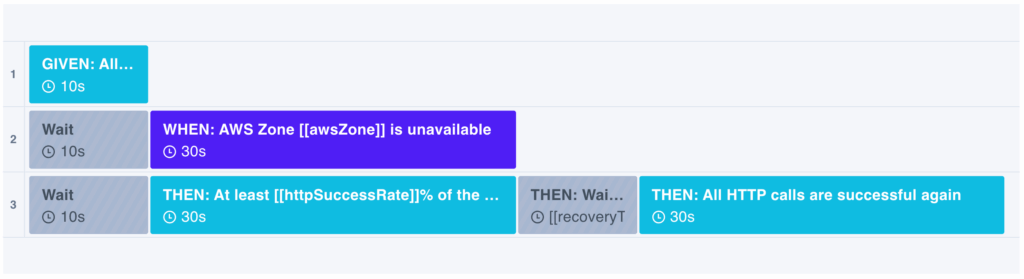

Step 1: Design the Chaos Experiment

A well-designed experiment starts with a clear hypothesis. This statement defines what you expect to happen during and as a result of the experiment.

- Hypothesis: “When we simulate an AWS AZ outage by blocking all network traffic to one zone, our load balancer will successfully detect the unhealthy targets and redirect all traffic to the healthy zones. Key business metrics, like error rate and latency, will remain stable.”

Next, you must precisely define the blast radius. You want to contain the failure to a specific, narrow set of targets to run a safe and meaningful experiment.

Steadybit’s query-based targeting makes this simple. You can select all targets within a specific AWS availability zone (e.g., us-east-1a) without needing to manually list hosts or containers.

Step 2: Simulate the AZ Outage

With your targets defined, you can configure the attack. To perfectly simulate an unreachable AZ, we use a Blackhole zone attack.

The Blackhole attack drops all network traffic to and from the targeted instances. This mimics the real-world scenario where an entire zone becomes inaccessible due to a network partition or other major failure. You can configure this Blackhole action to run for a certain duration while also running HTTP checks in parallel to gauge application functionality.

Here’s an example of this type of experiment design.

Steadybit Experiment Template: Load Balancer Covers an AWS Zone Outage

Before you run the experiment, you should also connect your chaos engineering tool with your observability tool so you can validate that alerts are being raised in real-time as you would expect.

Step 3: Run and Analyze the Results

Now, it’s time to run the experiment and observe your system’s behavior in real time. Watch your dashboards closely.

- Did the load balancer’s health checks correctly identify and mark the targets in the affected AZ as unhealthy?

- Was traffic successfully and quickly routed to the instances in the other AZs?

- Did your application’s error rate, throughput, and latency remain within acceptable limits?

- Did any downstream services experience issues due to hidden dependencies?

You can use these insights to improve and harden your system. You might discover a misconfigured health check, an under-provisioned auto-scaling group, or a critical dependency you never knew existed.

If your hypothesis was accurate, your experiment is a “success”. If your hypothesis was wrong, your experiment “failed” and you will need to review the results to either revise your hypothesis or improve your systems. You might discover a misconfigured health check, an under-provisioned auto-scaling group, or a critical dependency you never knew existed.

By finding and fixing reliability issues in a controlled experiment earlier in the software development lifecycle, you will be preventing future outages one experiment at a time.

Turning Chaos Experiments into Continuous Tests

Load balancers are fundamental to building highly available systems, but they are not a “set it and forget it” solution. Assuming that your failover mechanisms will work as expected is a risk your business can’t afford. You need to continuously test these types of processes to validate that your systems are truly resilient.

By running experiments on a schedule or with CI/CD automation, you can move from a reactive hope-based strategy to a proactive data-driven process that builds a culture of reliability. You’ll gain evidence that your systems can withstand one of the most common—and potentially damaging—failure modes in the cloud.

Ready to start running experiments? See how easy Steadybit makes it to create and run experiments that provide real insights on your system’s resilience. Schedule a demo or start a free trial today to put your load balancers to the test.

Get started today

Full access to the Steadybit Chaos Engineering platform.

Available as SaaS and On-Premises!

or sign up with

Book a Demo

Let us guide you through a personalized demo to kick-start your Chaos Engineering efforts and build more reliable systems for your business!