Behind the Scenes: Query Language Parsing

In this second part of the query language blog series, we look closely at the implementation of the lexer and parser.

In a previous blog post, we introduced Steadybit’s query language and how it can help you express specific targeting rules in complex environments. This blog post will look under the hood showing how the query language’s lexer and parser are implemented. We will learn how ANTLR is leveraged to construct Java and JavaScript lexers and parsers.

Desired Syntax

The query language is mainly intended to identify/find targets to execute actions (attacks, checks…) against them. What are targets, though? For Steadybit, targets are observed hosts, Kubernetes workloads, API endpoints and more. Targets carry attributes, e.g., Kubernetes deployments carry the deployment name and the namespace in which they are deployed. When identifying and finding targets to attack or check, it is beneficial to have full access to all the attributes and boolean algebra.

Of course, there is no need to reinvent the wheel regarding query languages. There are many suitable and frequently used variants out there. Based on our experience and customers’ feedback, we were looking for a syntax similar to

- SQL where clauses, e.g., k8s.deployment=checkout AND k8s.namespace LIKE ‘dev-%’ or the

- Lucene query language, e.g., k8s.deployment:checkout AND k8s.namespace:dev*

In the end, we settled for a variation of SQL where clauses. Now, to parsing this syntax!

Generator Selection

We could parse the syntax through manually crafted code, but this would be error-prone, hard to maintain and generally tedious. On top of this, we would have to support a parser in Java and JavaScript. In contrast, several highly capable, commonly used and mature solutions for parsing text are available. ANTLR is one of these solutions, and it is the one we chose.

ANTLR accepts lexer and parser grammars as input and generates lexers and parsers in various languages. Maven build plugins, IntelliJ IDE extensions, books, support articles, and more make ANTLR a good choice for simple and more advanced use cases.

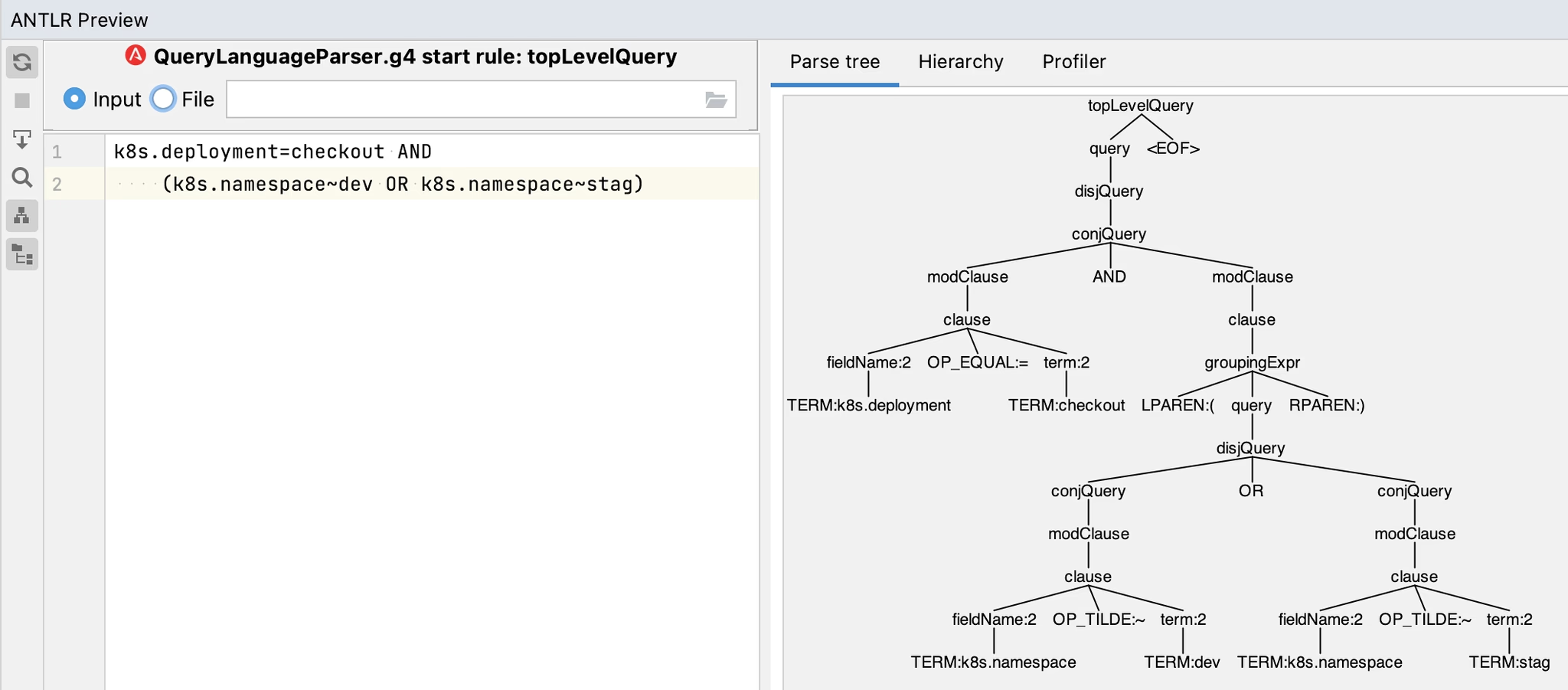

The screenshot above depicts the IntelliJ IDEA ANTLR v4 plugin you can install through the marketplace. You can use the input field on the left to define a text that the plugin will parse. The image on the right side presents the parse tree (sometimes called abstract syntax tree). When working with an ANTLR-generated parser, you will work with the parse tree through an object hierarchy.

Lexer and Parser Grammars

Authoring lexer and parser grammars can be very complex. Much too complex for a blog post such as this. Lucky for us, our query language is based on existing query languages! Also, thanks to ANTLR’s popularity, there are a lot of open source grammars we can use as inspiration! An SQL lexer and parser would have been our second choice since it comes with many additional capabilities we do not need. Consequently, we looked for an open-source Lucene query language lexer and parser that we could modify for our needs.

ANTLR has a handy collection of open-source grammars within their antlr/grammars-v4 repository. Among those, even an MIT-licensed Lucene query language lexer and parser! This enabled us to move more quickly and avoid common pitfalls, e.g., escaping, conjunction vs. disjunction precedence and parentheses logic. We adapted a few things here and there (using = instead of :), made the grammar more lenient (allowing lowercase AND and OR) and removed some syntax capabilities (number comparisons).

lexer grammar QueryLanguageLexer; AND : 'AND' | 'and' | '&&'; OR : 'OR' | 'or' | '||'; NOT : 'NOT' | 'not' | '!';

The snippet above shows the first few lines of our lexer’s grammar. Most of it is still strikingly similar to the Lucene query language lexer grammar. The following snippet shows the first few lines of our parser’s grammar. Again, most of it is still similar to the Lucene query language parser grammar.

parser grammar QueryLanguageParser;

options { tokenVocab=QueryLanguageLexer; }

topLevelQuery

: query EOF

;

query

: disjQuery?

;

disjQuery

: conjQuery ( OR conjQuery )*

;

Generating the Lexer and Parser Code

With the scariest work out of the way, we can now turn back to a common problem: Build definitions. We leverage Maven to build the component requiring the query language. Consequently, this is where we integrated the build steps.

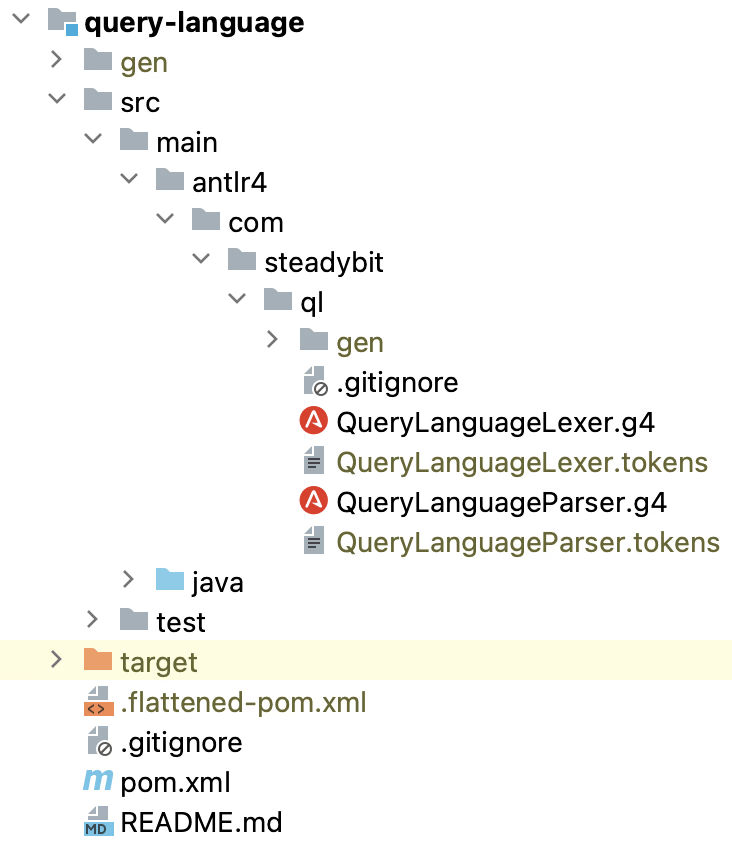

The image above shows our query-language module. This module contains the grammars, build logic for the generated Java lexer and parser and some wrappers around the generated code. To generate the lexer and parser code, we use the antlr4-maven-plugin, as shown below.

<plugin> <groupId>org.antlr</groupId> <artifactId>antlr4-maven-plugin</artifactId> <version>${antlr.version}</version> <configuration> <visitor>true</visitor> <listener>true</listener> </configuration> <executions> <execution> <goals> <goal>antlr4</goal> </goals> </execution> </executions> </plugin>

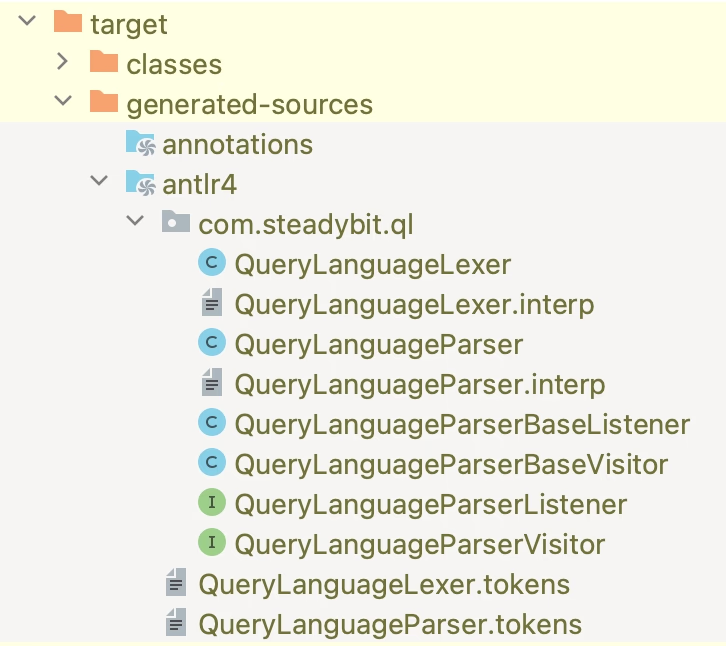

This Maven build plugin generates additional sources within the target directory, as shown in the picture below. You will learn how to use these generated sources in the next section.

As the last build step, we must construct a JavaScript lexer and parser implementation. You can also achieve this through the Maven build plugin (we do this from another Maven module that wraps the UI sources).

<plugin> <groupId>org.antlr</groupId> <artifactId>antlr4-maven-plugin</artifactId> <version>${antlr.version}</version> <executions> <execution> <id>antlr4</id> <goals> <goal>antlr4</goal> </goals> <phase>generate-resources</phase> </execution> </executions> <configuration> <visitor>true</visitor> <listener>true</listener> <sourceDirectory>../query-language/src/main/antlr4/com/steadybit/ql</sourceDirectory> <outputDirectory>src/queryLanguage/parser/generated</outputDirectory> <arguments> <argument>-Dlanguage=JavaScript</argument> </arguments> </configuration> </plugin>

Intermediate Summary

Let us quickly summarize what we got at this point before we carry on. We got a lexer implementation in both Java and JavaScript that can turn a character sequence into a token sequence (QueryLanguageLexer). A token sequence is still too low-level for us to work with. We also got a parser implementation in both languages that can turn the token sequence into a parse tree (QueryLanguageParser). Parse trees encapsulate a lot of the semantics of our query language, and we can work with these going forward. To aid development, ANTLR also generated a visitor interface (QueryLanguageParserVisitor) that we can implement to traverse the parse tree.

Usage in Java

What you do with the generated lexer and parser is up to you. Our main objective is to translate the generated parse tree into an object hierarchy compatible with the existing code base. To achieve this, we use the generated lexer, parser and visitor, as the code snippet below alludes.

package com.steadybit.ql; import com.steadybit.ql.model.Clause; import org.antlr.v4.runtime.CharStreams; import org.antlr.v4.runtime.CommonTokenStream; private QueryLanguageParser toParser(String query) { var input = CharStreams.fromString(query); var errorListener = new ErrorListener(); var lexer = new QueryLanguageLexer(input); lexer.addErrorListener(errorListener); var tokens = new CommonTokenStream(lexer); var parser = new QueryLanguageParser(tokens); // remove the default error to stderr listener parser.removeErrorListeners(); parser.addErrorListener(errorListener); return parser; } public Clause toClause(String query) { return toParser(query) .topLevelQuery() .accept(new QueryToClauseTransformationVisitor()); }

Usage in JavaScript

The nice thing about the generated ANTLR code is the API similarity across languages. The JavaScript API and the generated code’s usage are very similar to the Java variant. The following code snippet shows how you can combine the lexer, parser and visitor with the antlr4 module.

import antlr4 from "antlr4"; import QueryLanguageParser from "./generated/QueryLanguageParser"; import { QueryLanguageToTargetPredicateVisitor } from "./visitor"; import QueryLanguageLexer from "./generated/QueryLanguageLexer"; import { QueryLanguageErrorListener } from "./errorListener"; import { isParsingError, ParsingResult } from "./types"; export function toTargetPredicate(input: string): ParsingResult { try { const chars = new antlr4.InputStream(input); const lexer = new QueryLanguageLexer(chars); lexer.addErrorListener(new QueryLanguageErrorListener()); const tokens = new antlr4.CommonTokenStream(lexer); const parser = new QueryLanguageParser(tokens); parser.buildParseTrees = true; // remove the default error to console.error listener parser.removeErrorListeners(); parser.addErrorListener(new QueryLanguageErrorListener()); const tree = parser.topLevelQuery(); return ( tree.accept(new QueryLanguageToTargetPredicateVisitor()) ?? { operator: "AND", predicates: [], } ); } catch (e) { if (isParsingError(e)) { return e; } else { return { message: e.message, line: 1, column: 1, }; } } }

Conclusion

We saw how an existing query language could be used as a starting point for a new query language using ANTLR. This exercise results in a query language with parsers in both Java and JavaScript. This query language is now ready to be integrated with the rest of the product.

In a future article, we will dive deeper into the JavaScript parser usage, specifically how we integrated the parser with the Monaco editor!