The State of Chaos Engineering

Chaos Engineering has been around for several years, and the practice has evolved. Within this post, we will look at past and modern interpretations, industry opinions and what we believe to be critical for you to leverage the practice to reach your goals.

Chaos Engineering has always been about understanding your systems, learning their limits and verifying the truth to build confidence. This confidence is essential to improving, maintaining and operating systems.

Chaos Engineering is the discipline of experimenting on a system in order to build confidence in the system’s capability to withstand turbulent conditions in production. – Principles of Chaos Engineering

In the past, much focus was placed on the mechanics of chaos engineering to induce turbulent conditions. For example, how to stop containers, cause CPU spikes or simulate security incidents. At the same time, the industry tried to address misconceptions and uncatered needs through training and certification. For example, “Chaos Engineering is just about breaking things” is a common misunderstanding, and continuous learning and confidence verification was left unaddressed.

What Others are Saying

Experiment to ensure that the impact of failures is mitigated. […] However, our ability to fail over is complex and hard to test, so often the whole system falls over. – Adrian Cockcroft, former VP at AWS

Adrian Cockcroft and Yaniv Bossem gave a talk in 2021 in which they shared, among others, their belief that one should experiment – and continuously! The talk is a good starting point for understanding why experimentation (a foundational Chaos Engineering principle) is relevant.

Adrian Cockcroft mentions that fail-over scenarios are often among the most complex parts of the system – yet the least tested.

Most of the tooling today focuses on periodic events called ‘GameDays,’ which only give the developer a point- in-time reliability snapshot and understanding of their system. – Harness

Harness, a company providing another Chaos Engineering solution, is observing the same issues regarding continuous verification. Let us look at the following quote from Liz Fong-Jones to go into more detail.

Once you’ve done a chaos engineering experiment as a one-off, it’s tempting to say you’re done, right? Unfortunately, the forces of entropy will work against you and render your previous safety guarantees moot unless you continuously verify the results of your exploration. Thus, after a chaos engineering experiment succeeds once, run it regularly either with a cron job or by calendaring a repeat exercise. This fulfills two purposes: ensuring that changes to your system don’t cause undetected regressions, and the people involved get a refresher in their knowledge and skills. As an added third benefit, they can be a great on-call training activity, as well – Liz Fong-Jones, Field-CTO, Honeycomb

Liz Fong-Jones shared how Honeycomb started its chaos engineering journey, what misconceptions they faced, and what practices they established. One crucial need she shared is the need for continuous verification. In-frequent execution of experiments, e.g., as part of game days, does not account for modern systems’ rate of change or dynamism.

We believe that breaking systems is easy – especially with all the tooling built in the last years. However, the frequent & controlled introduction of turbulent conditions for learning and verification is not sufficiently addressed by many solutions – especially when rolling out Chaos Engineering practices across teams. Then, topics like role-based access control, trust, repeatability, blast-radius control, and so many more become important.

Open source projects were not able to meet our need for a tool easy to use and our need for out- of-the-box features and responsive support. I also wanted to add features and be able to advance on the vision of giving chaos engineering to our developer team. Steadybit allows us to add chaos engineering to the day-to-day life of developers and focus on what our users care about. – Antoine Choimet, Chaos Engineer, ManoMano

Antoine Choimet, a Steadybit customer, describes the desire to integrate Chaos Engineering practices into the day-to-day of ManoMano employees. Game days and similar special occasions cannot keep up with modern systems’ change rates.

Furthermore, ManoMano analyzed how they can extend open-source solutions. This finding is consistent with other customers’ needs and experiences.

A digital immune system combines a range of practices and technologies from software design, development, automation, operations and analytics to create superior user experience (UX) and reduce system failures that impact business performance. A DIS protects applications and services in order to make them more resilient so that they recover quickly from failures. – Gartner

In the last quarter of 2022, Gartner published an article and their technology trends in which they list “six prerequisites for a strong digital immune system”. A notable part of this is Chaos Engineering. Aside from the relative importance given to the practice, this also highlights how multi-faceted the problems and needs are. This a reality we see as well, e.g., with requests for bi-directional interaction between observability solutions and Steadybit.

Gartner goes on to state that organizations implementing these practices “will increase customer satisfaction by decreasing downtime by 80%”.

Key Capabilities of Modern Chaos Engineering Solutions

Reliability Testing

Modern solutions need to support some form of reliability testing. Reliability tests encode our assumptions about our system’s behavior under various non-ideal conditions. Through frequent execution, these reliability tests continuously verify the knowledge is up to date, find regressions and thus help us build confidence.

Examples of reliability tests are:

- Can our users continue shopping while a rolling update is happening?

- Will an additional Kubernetes node be started when the auto-scaler increases the replicas?

- Will our service start when service dependencies are unavailable?

It is easy to think of regular Chaos Engineering experiments as reliability tests. However, infrequently executed experiments are notably different from reliability tests. Reliability tests supported by modern solutions need to encode when they are safe to execute, when they should abort early, automatic roll-back and what constitutes a success and a failure.

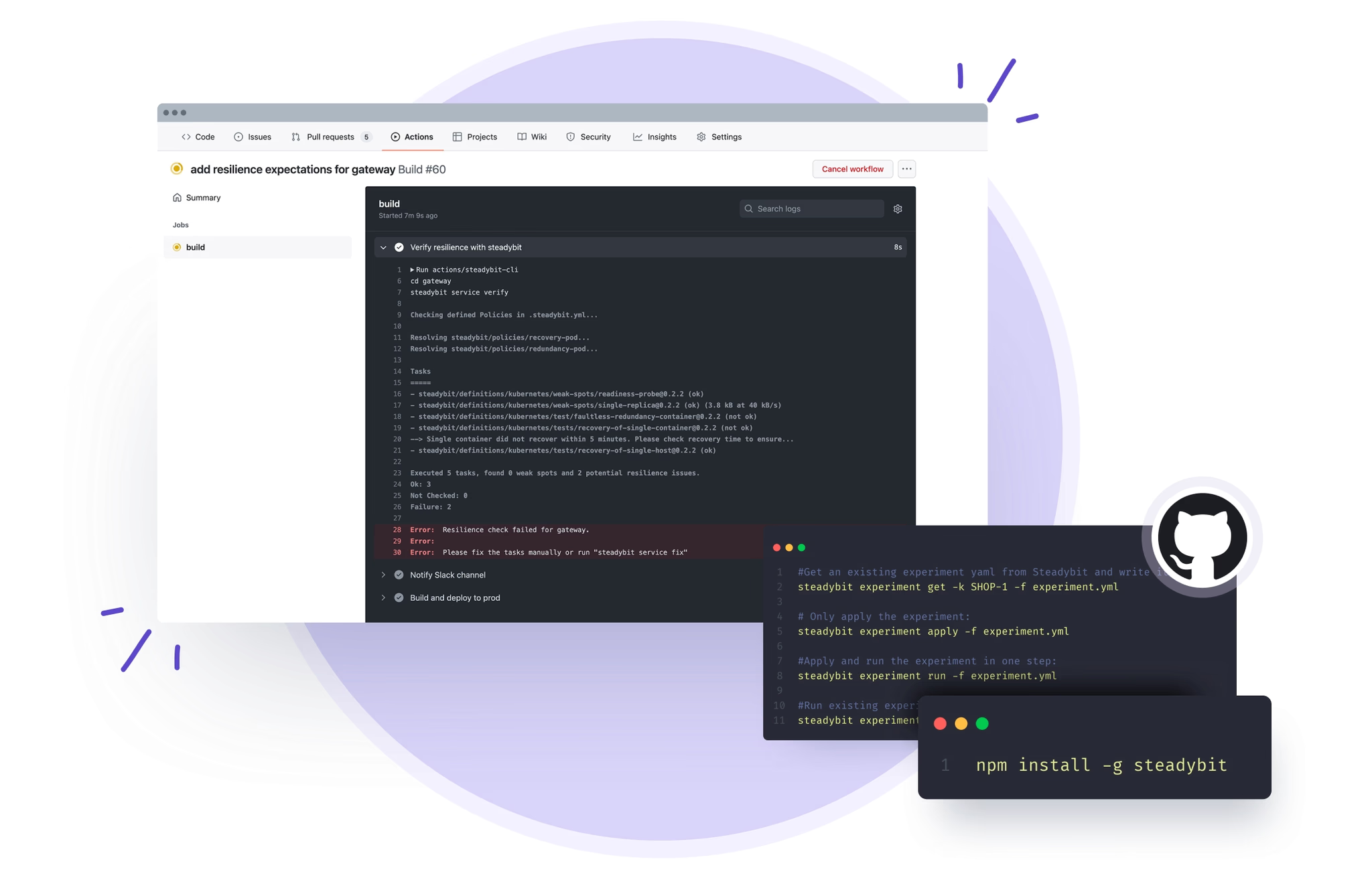

With Steadybit, you can leverage ready-made GitHub actions and the CLI to turn experiments into reliability tests!

Integration with Other Solutions

Organizations leverage a wide array of tools and products to address the challenges of modern systems – for example, observability, incident management, load testing, end-to-end testing or orchestration. A solution aiming to improve confidence in our systems needs to be able to interact with the other solutions.

Let’s take a look at some examples requiring such integrations:

- Does our observability solution identify the problems we have just caused?

- Execute our end-to-end tests to ensure the system works while a rolling update is in progress.

- Create a base load on our pre-production system using our load tests while executing a reliability test.

- Check that there is no open incident before, during or after a reliability test is executed.

- Wait for our Kubernetes deployment’s rollout to complete before continuing.

Modern Chaos Engineering solutions will natively support such integrations and offer extension points users can hook into to integrate with proprietary solutions.

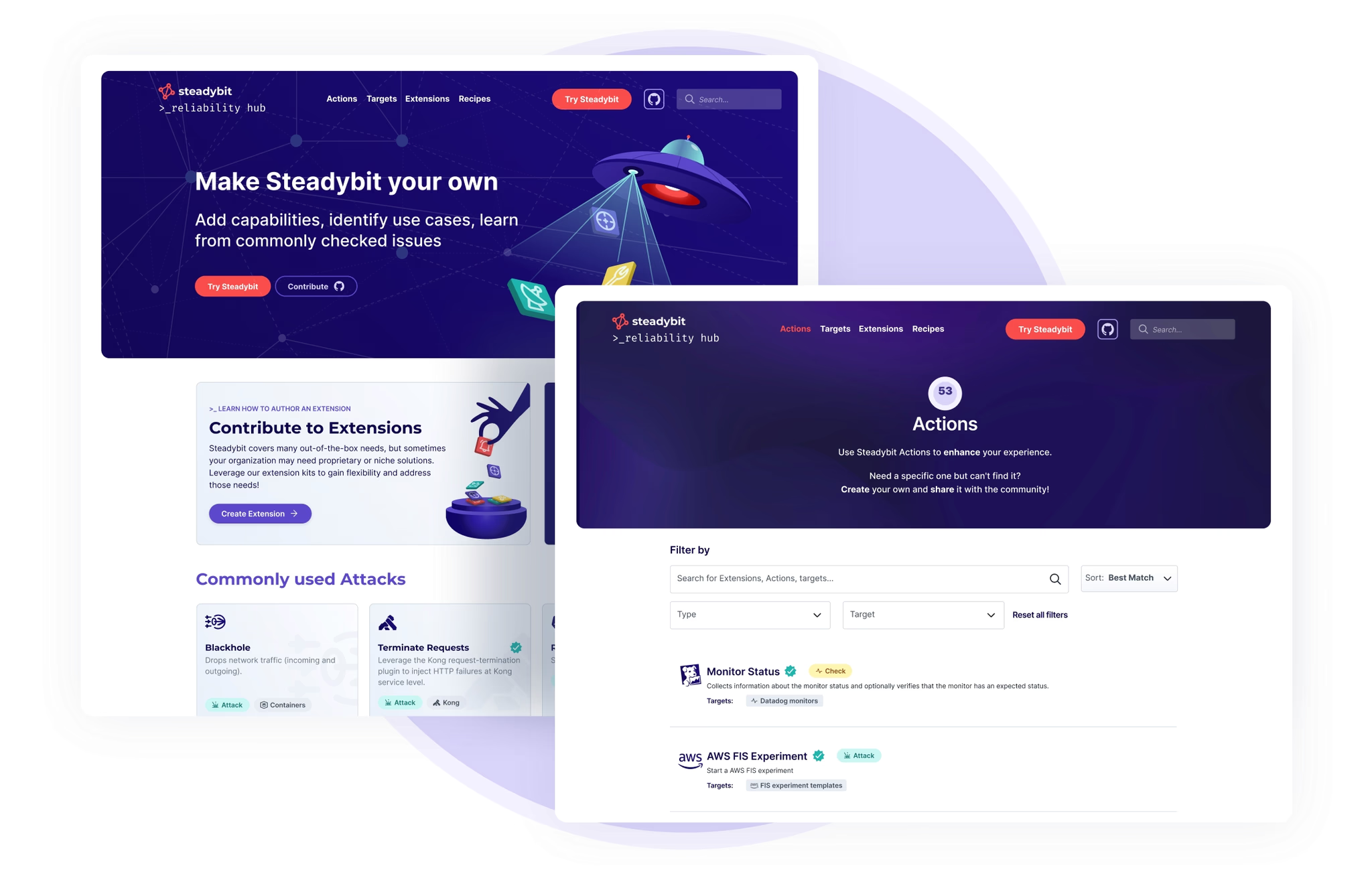

Integration and extensibility are cornerstones of Steadybit’s story. Leverage existing integrations into k6, JMeter, Datadog, and so many more out of the box. Or leverage our extensions kits to make Steadybit your own!

Sharing and Collaboration

Chaos Engineering and reliability testing are relatively new practices. Consequently, best practices and experience are only readily available in few organizations. To counteract this, one could turn to training and certification programs. However, that would require a larger time investment.

Modern solutions take alternative approaches. Through shared experiments and recipes that the community can contribute, organizations can learn and immediately apply best practices from those that came before them.

Such sharing and collaboration is beneficial to crafting reusable reliability tests that may otherwise be time intensive or result in inconsistent outcomes.

The image above shows Steadybit’s reliability hub in which capabilities are documented, extensions are shared, and learnings are encoded through recipes.

Great Usability

The introduction of turbulent conditions into systems comes with a natural risk. Nobody wants to cause an incident. For that reason, modern Chaos Engineering solutions must not only facilitate causing turbulent conditions, but they must do so transparently in a way that creates trust and confidence in their users. Among others, this means:

- Modern solutions put guardrails in place to avoid common pitfalls or to enforce team boundaries.

- They support a wide range of use cases without custom code.

- They communicate blast radius and impact clearly.

- Emergency stops and automatic rollbacks are standard.

- Facilitate quick experiments and modifications without committing to long-term support of these.

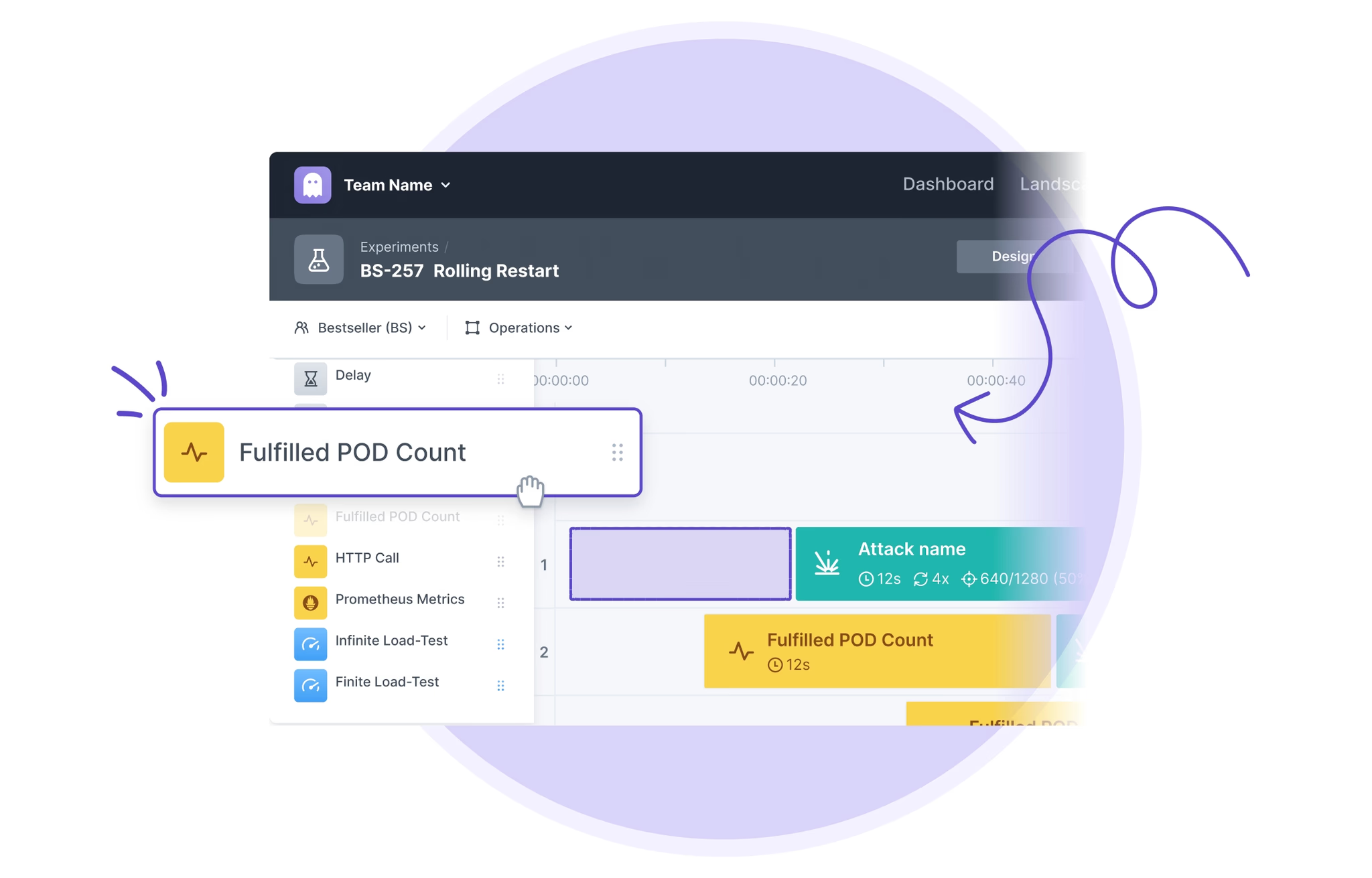

Steadybit makes the creation of experiments straightforward. There is no need to write custom code – drag and drop actions into a web-based editor to start!

Register for the Webinar

You can learn more through a webinar we are hosting on January 26! Join us for a session moderated by Kelsey Hightower from Google with speakers Daljeet Sandu from Datadog, and our customer Antoine Choimet from ManoMano!

Get started today

Full access to the Steadybit Chaos Engineering platform.

Available as SaaS and On-Premises!

or sign up with

Book a Demo

Let us guide you through a personalized demo to kick-start your Chaos Engineering efforts and build more reliable systems for your business!